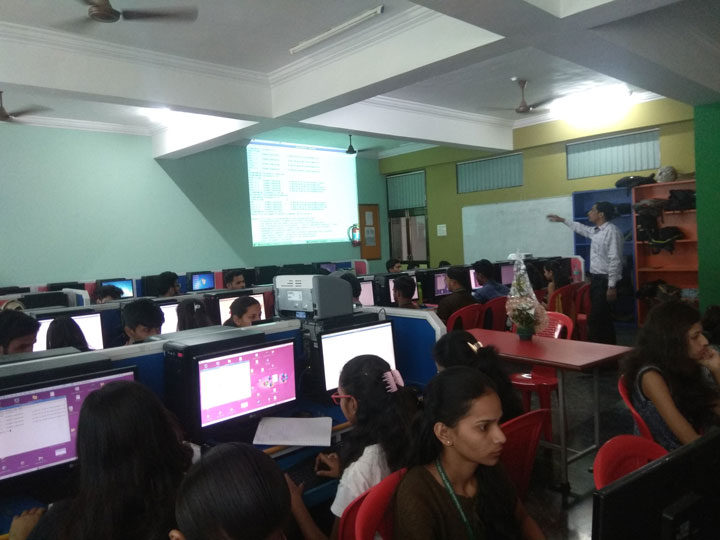

The IT Department organised a one-day workshop on “Hadoop and Big Data” on 9 March 2019, attended by 38 participants. Hadoop is an open-source software framework for storing and processing big data in a distributed fashion on large clusters of commodity hardware. Essentially, it accomplishes two tasks: massive data storage and faster processing. Hadoop has become one of the most talked-about technologies, and one of the top reasons is its ability to handle huge amounts of data, of any kind, quickly.

| Item | Details |
|---|---|
| Department | Computer Engineering and Information Technology |
| Time | 9.30 a.m. to 4.00 p.m. |
| Venue | Lab No. 1, Second Floor, Architecture Building |
| Co-ordinator | Amol Karande |
| Speakers | Likhesh Kolhe from Terna Engineering College, Nerul |

The exponential growth of electronic data in volume, variety and velocity has made “Big Data and Hadoop” a subject in its own right. This explosion challenges current data mining techniques, which are built around structured data, whereas studies show that over 80% of data is unstructured. Analytics is one challenge; security, in terms of privacy and integrity, is another. The workshop aimed to throw light on Big Data challenges and the techniques that enable analytics.

The workshop covered the following topics:

- Study of Hadoop ecosystem

- Programming exercises on Hadoop

- Programming exercises in NoSQL

- Implementing simple algorithms in Map-Reduce: matrix multiplication, aggregates, joins, sorting, searching, etc.

- Implementing any one Frequent Itemset algorithm using Map-Reduce

- Implementing any one Clustering algorithm using Map-Reduce

- Implementing any one data streaming algorithm using Map-Reduce
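To illustrate the kind of Map-Reduce exercise listed above, here is a minimal sketch of matrix multiplication that simulates the map, shuffle and reduce phases in plain Python on a single machine. It does not use Hadoop's Java API or Hadoop Streaming. The function names `mapper`, `reducer` and `matmul_mapreduce` are hypothetical, and the sketch assumes small dense matrices given as lists of lists.

```python
from collections import defaultdict


def mapper(name, i, j, value, n_rows_a, n_cols_b):
    """Emit ((row, col), (tag, k, value)) pairs for one matrix entry.

    For C = A x B, entry A[i][j] contributes to every C[i][c],
    and entry B[i][j] contributes to every C[r][j].
    """
    if name == "A":
        for c in range(n_cols_b):
            yield (i, c), ("A", j, value)
    else:
        for r in range(n_rows_a):
            yield (r, j), ("B", i, value)


def reducer(key, values):
    """Join A and B values on the shared index k and sum the products."""
    a = {k: v for tag, k, v in values if tag == "A"}
    b = {k: v for tag, k, v in values if tag == "B"}
    return key, sum(a[k] * b[k] for k in a if k in b)


def matmul_mapreduce(A, B):
    n_rows_a, n_cols_b = len(A), len(B[0])
    # Input records: one (matrix, row, col, value) tuple per entry.
    records = [("A", i, j, v) for i, row in enumerate(A) for j, v in enumerate(row)]
    records += [("B", i, j, v) for i, row in enumerate(B) for j, v in enumerate(row)]

    # Map phase, with the shuffle simulated by grouping values by key.
    groups = defaultdict(list)
    for name, i, j, v in records:
        for key, val in mapper(name, i, j, v, n_rows_a, n_cols_b):
            groups[key].append(val)

    # Reduce phase: one reducer call per output cell (row, col).
    C = [[0] * n_cols_b for _ in range(n_rows_a)]
    for key in sorted(groups):
        (r, c), total = reducer(key, groups[key])
        C[r][c] = total
    return C


print(matmul_mapreduce([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Keying each intermediate pair by the output cell means every reducer receives exactly the row of A and column of B it needs, which is the same pattern that aggregates and joins follow in Map-Reduce.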